Alert – Long post!

In this post, I present a summary of my review of tools that automatically analyze rhetorical structures in academic writing.

The tools considered cater to different users and purposes. AWA and RWT aim to give feedback that helps students improve their academic writing. Mover and SAPIENTA, on the other hand, help researchers identify the structure of research articles. Mover even allows users to give a second opinion on the classification of moves and to add new training data (this can lead to a less accurate model if students with less expertise add potentially wrong training data). Despite these differences, the tools share a common thread and fulfill the following criteria:

- They look at scientific text – full research articles, abstracts or introductions. Tools that automate argumentative zoning of other open text (Example) are not considered.

- They automate the identification of rhetorical structures (zones, moves) in research articles (RA), with the sentence as the unit of analysis.

- They are broadly based on the Argumentative Zoning (AZ) scheme by Simone Teufel or the CARS model by John Swales (either the original scheme or a modified version of it).
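To make the second criterion concrete, here is a minimal sketch of sentence-level move tagging. It is not how any of the tools below actually work: the cue phrases in `CUES`, the naive sentence splitter and the function names are all my own assumptions, chosen only to show what "one label per sentence" looks like with the three CARS moves.

```python
import re

# Hypothetical cue phrases for the three CARS moves (illustration only);
# real tools use far richer hand-crafted rules or learned models.
CUES = {
    "Establish a territory": [
        r"\bhas been widely studied\b",
        r"\bplays? an important role\b",
        r"\bprevious (studies|research)\b",
    ],
    "Establish a niche": [
        r"\bhowever\b",
        r"\blittle is known\b",
        r"\bremains? unclear\b",
    ],
    "Occupy the niche": [
        r"\bin this (paper|study)\b",
        r"\bwe (propose|present|report)\b",
    ],
}


def split_sentences(text):
    # Naive splitter: break after '.', '!' or '?' followed by whitespace.
    return [s.strip() for s in re.split(r"(?<=[.!?])\s+", text) if s.strip()]


def tag_sentence(sentence):
    # Return the first move whose cue phrase matches, or None if nothing matches.
    for move, patterns in CUES.items():
        if any(re.search(p, sentence, re.IGNORECASE) for p in patterns):
            return move
    return None


def tag_text(text):
    # The sentence is the unit of analysis: one (sentence, move) pair each.
    return [(s, tag_sentence(s)) for s in split_sentences(text)]


if __name__ == "__main__":
    sample = (
        "Topic modelling has been widely studied. "
        "However, little is known about its use in education. "
        "In this paper, we propose a new approach."
    )
    for sentence, move in tag_text(sample):
        print(f"[{move}] {sentence}")
```

Sentences that match no cue phrase are left untagged (`None`) rather than forced into a move.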

Tools (in alphabetical order):

- Academic Writing Analytics (AWA) – Summary notes here

AWA also has a reflective parser to give feedback on students’ reflective writing, but the focus of this post is on the analytical parser. AWA demo, video courtesy of Dr. Simon Knight:

- Mover

Available for download as a standalone application. Sample screenshot below:

- Research Writing Tutor (RWT) – Summary notes here

RWT demo, video courtesy of Dr. Elena Cotos:

- SAPIENTA

Available for download as a standalone Java application, or accessible as a web service. Sample screenshot of tagged output from the SAPIENTA web service below:

The schemes are generally intended to apply to all academic writing, and this has been tested successfully on data from different disciplines. The table below compares the schemes used by the tools:

| Tool | Source & Description | Annotation Scheme |

|---|---|---|

| AWA | AWA Analytical scheme (Modified from AZ for sentence level parsing) | -Summarizing -Background knowledge -Contrasting ideas -Novelty -Significance -Surprise -Open question -Generalizing |

| Mover | Modified CARS model -three main moves and further steps | 1. Establish a territory -Claim centrality -Generalize topics -Review previous research 2. Establish a niche -Counter claim -Indicate a gap -Raise questions -Continue a tradition 3. Occupy the niche -Outline purpose -Announce research -Announce findings -Evaluate research -Indicate RA structure |

| RWT | Modified CARS model -3 moves, 17 steps | Move 1. Establishing a territory -1. Claiming centrality -2. Making topic generalizations -3. Reviewing previous research Move 2. Identifying a niche -4. Indicating a gap -5. Highlighting a problem -6. Raising general questions -7. Proposing general hypotheses -8. Presenting a justification Move 3. Addressing the niche -9. Introducing present research descriptively -10. Introducing present research purposefully -11. Presenting research questions -12. Presenting research hypotheses -13. Clarifying definitions -14. Summarizing methods -15. Announcing principal outcomes -16. Stating the value of the present research -17. Outlining the structure of the paper |

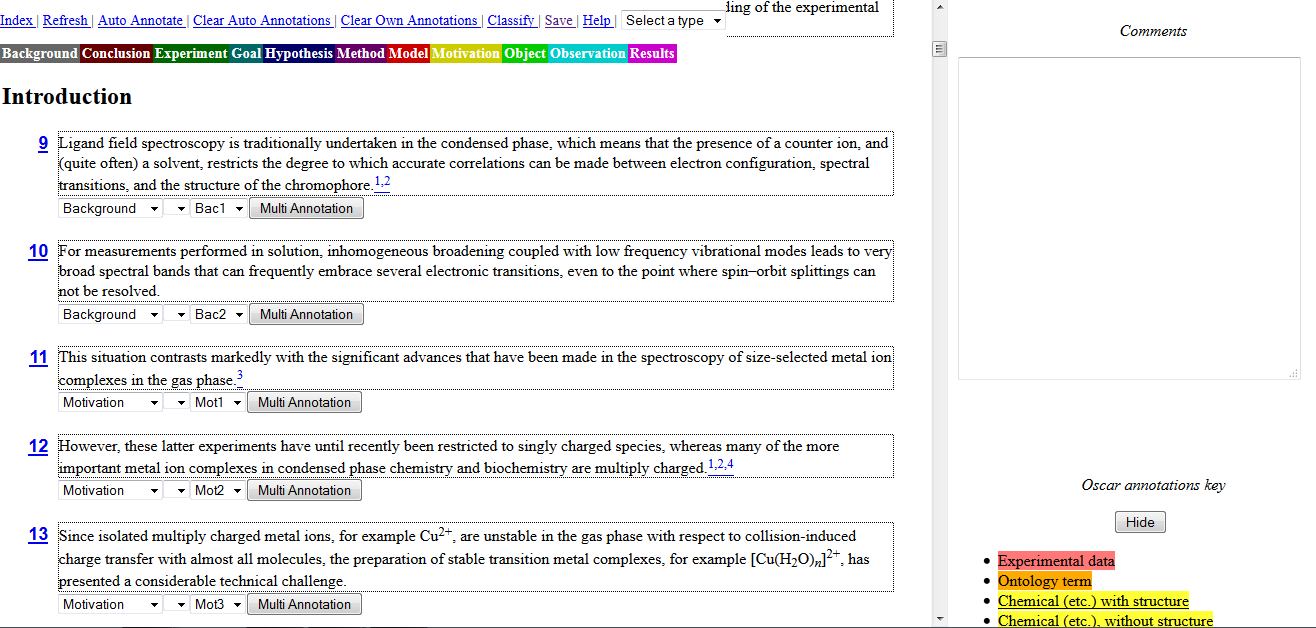

| SAPIENTA | finer grained AZ scheme -CoreSC scheme with 11 categories in the first layer | -Background (BAC) -Hypothesis (HYP) -Motivation (MOT) -Goal (GOA) -Object (OBJ) -Method (MET) -Model (MOD) -Experiment (EXP) -Observation (OBS) -Result (RES) -Conclusion (CON) |
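Since the CARS-based schemes are two-level (moves containing steps), one simple way to hold such a scheme in code is a mapping from move to steps plus a reverse index. The sketch below transcribes Mover's scheme from the table above. The names `MOVER_SCHEME`, `STEP_TO_MOVE` and `move_of` are my own and do not come from the tool.

```python
# Mover's modified CARS scheme, transcribed from the comparison table.
# The variable and function names are illustrative, not part of Mover.
MOVER_SCHEME = {
    "Establish a territory": [
        "Claim centrality",
        "Generalize topics",
        "Review previous research",
    ],
    "Establish a niche": [
        "Counter claim",
        "Indicate a gap",
        "Raise questions",
        "Continue a tradition",
    ],
    "Occupy the niche": [
        "Outline purpose",
        "Announce research",
        "Announce findings",
        "Evaluate research",
        "Indicate RA structure",
    ],
}

# Reverse index: step label -> the move it belongs to.
STEP_TO_MOVE = {
    step: move for move, steps in MOVER_SCHEME.items() for step in steps
}


def move_of(step):
    # Look up the move for a step-level label; raises KeyError if unknown.
    return STEP_TO_MOVE[step]


if __name__ == "__main__":
    print(move_of("Indicate a gap"))
```

With the reverse index, a classifier that predicts fine-grained steps can also be evaluated at the coarser move level.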

Method:

The tools are built on different data sets and use different methods to automate the analysis. Most of them use manually annotated data as the standard for training a model that classifies sentences into the categories automatically. Details below:

| Tool | Data type | Automation method |
|---|---|---|
| AWA | Any research writing | Rule-based NLP: Xerox Incremental Parser (XIP) to annotate rhetorical functions in discourse. |
| Mover | Abstracts | Supervised learning: Naïve Bayes classifier with data represented as a bag of clusters with location information. |
| RWT | Introductions | Supervised learning: Support Vector Machine (SVM) with n-dimensional vector representation and n-gram features. |
| SAPIENTA | Full article | Supervised learning: SVM with sentence aspect features, and sequence labelling using Conditional Random Fields (CRF) for sentence dependencies. |
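To give a feel for the supervised approach, here is a small sketch of a Naïve Bayes sentence classifier with a location feature. It is a simplification under stated assumptions, not Mover's implementation: I use a plain bag of words instead of a bag of clusters, the location feature is just "which third of the text", and the training sentences in `TRAIN` are invented toy data.

```python
import math
from collections import Counter, defaultdict


def features(sentence, index, total):
    # Bag of lowercase words plus one coarse location feature:
    # which third of the text the sentence falls in (0, 1 or 2).
    words = sentence.lower().split()
    position = min(2, index * 3 // total)
    return words + [f"__pos_{position}"]


class NaiveBayes:
    """Multinomial Naive Bayes with add-one (Laplace) smoothing."""

    def __init__(self):
        self.label_counts = Counter()
        self.feature_counts = defaultdict(Counter)
        self.vocab = set()

    def fit(self, examples):
        # examples: iterable of (feature_list, label) pairs.
        for feats, label in examples:
            self.label_counts[label] += 1
            self.feature_counts[label].update(feats)
            self.vocab.update(feats)

    def predict(self, feats):
        total = sum(self.label_counts.values())
        best_label, best_score = None, -math.inf
        for label, count in self.label_counts.items():
            # Log prior plus smoothed log likelihood of each feature.
            score = math.log(count / total)
            denom = sum(self.feature_counts[label].values()) + len(self.vocab)
            for f in feats:
                score += math.log((self.feature_counts[label][f] + 1) / denom)
            if score > best_score:
                best_label, best_score = label, score
        return best_label


# Invented toy data: (sentence, index in abstract, abstract length, move).
TRAIN = [
    ("Writing feedback is an important research area", 0, 3, "Establish a territory"),
    ("Many studies have examined student writing", 0, 3, "Establish a territory"),
    ("However few tools give feedback on structure", 1, 3, "Establish a niche"),
    ("Little is known about rhetorical moves in drafts", 1, 3, "Establish a niche"),
    ("This paper presents a tool for tagging moves", 2, 3, "Occupy the niche"),
    ("We report results of an evaluation", 2, 3, "Occupy the niche"),
]

model = NaiveBayes()
model.fit([(features(s, i, n), label) for s, i, n, label in TRAIN])
prediction = model.predict(features("However little is known about such tools", 1, 3))

if __name__ == "__main__":
    print(prediction)
```

The location feature matters because moves tend to appear in a typical order, so where a sentence sits in the text is itself evidence of its move. An SVM or CRF, as used by RWT and SAPIENTA, would replace the classifier here but keep the same idea of per-sentence features and labels.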

Others:

- The SciPo tool helps students write summaries and introductions for scientific texts in Portuguese.

- Another tool, CARE, is a word concordancer used to search for words and moves in research abstracts – Summary notes here.

- An ML approach that considers three different schemes for annotating scientific abstracts (no tool).

If you think I’ve missed a tool that does similar automated tagging of research articles, do let me know so I can include it in my list 🙂