Generative AI (GenAI) has captured global attention since ChatGPT was publicly released in November 2022. The remarkable capabilities of AI have sparked a myriad of discussions around its vast potential, ethical considerations, and transformative impact across diverse sectors, including education. In particular, many communities are keenly interested in how humans can learn to work with AI so that it augments their intelligence rather than undermines it.

My own interest in writing research led me to explore human-AI partnerships for writing. We are not far from using generative AI technologies in everyday writing, with co-pilots becoming the norm rather than the exception. A ubiquitous tool like Microsoft Word, which many use as their preferred platform for digital writing, may well come with AI support as an essential feature for improved productivity (early research shows how people are imagining these features). But at what cost?

In our recent full paper, we explored an analytic approach to studying writers’ support-seeking behaviour and dependence on AI in a co-writing environment:

Antonette Shibani, Ratnavel Rajalakshmi, Srivarshan Selvaraj, Faerie Mattins, Simon Knight (2023). Visual representation of co-authorship with GPT-3: Studying human-machine interaction for effective writing. In M. Feng, T. Käser, and P. Talukdar, editors, Proceedings of the 16th International Conference on Educational Data Mining, pages 183–193, Bengaluru, India, July 2023. International Educational Data Mining Society [PDF].

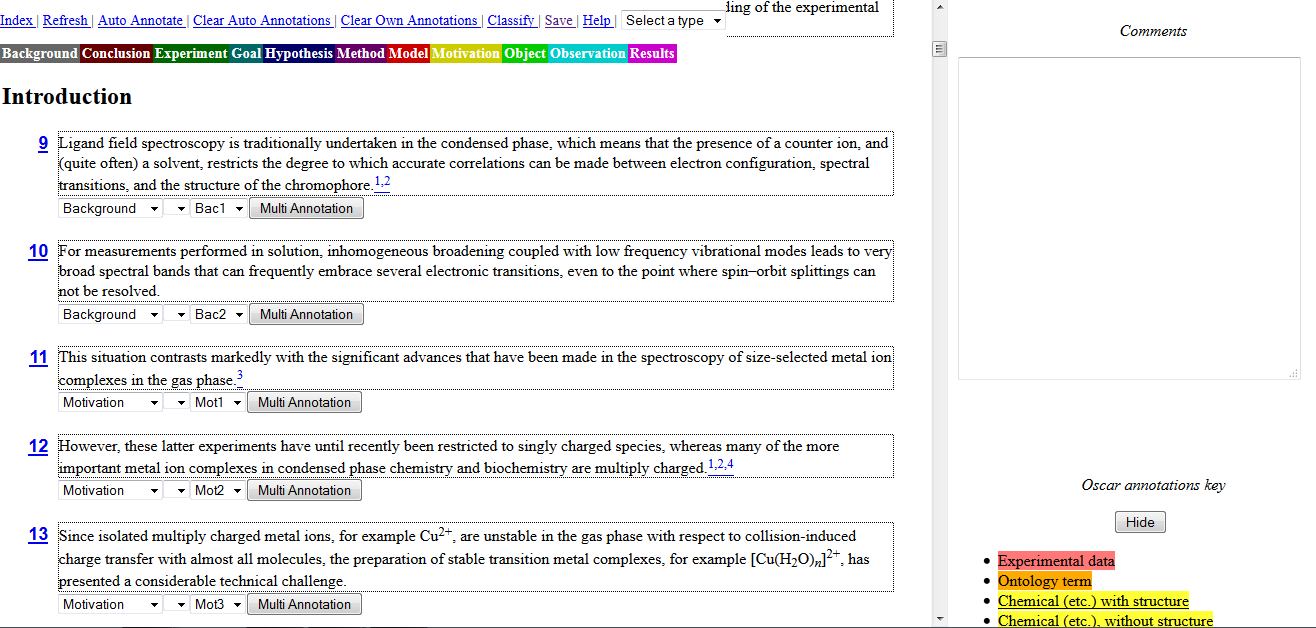

Using keystroke data from the interactive writing environment CoAuthor, powered by GPT-3, we developed CoAuthorViz (see example figure below) to characterise writer interaction with AI feedback. CoAuthorViz captures key constructs: the writer incorporating a GPT-3 suggested text as is (GPT-3 suggestion selection), the writer not incorporating a GPT-3 suggestion (empty GPT-3 call), the writer modifying the suggested text (GPT-3 suggestion modification), and the writer’s own writing (user text addition). We demonstrated how such visualizations (and associated metrics) help characterise varied levels of AI interaction in writing, from low to high dependency on AI.

Figure: CoAuthorViz legend and three samples of AI-assisted writing (squares denote writer-written text, and triangles denote AI-suggested text)
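To make the four constructs concrete, here is a minimal Python sketch of how a writing session could be summarised once each unit of text has been labelled with one of them. The `Construct` names, the `summarise` function, and the `ai_reliance` ratio are hypothetical illustrations written for this post; they are not the CoAuthor log schema or the metrics reported in the paper, and the sample session is made up.

```python
from collections import Counter
from enum import Enum


class Construct(Enum):
    """The four interaction constructs described above (labels are illustrative)."""
    SUGGESTION_SELECTION = "GPT-3 suggestion selection"
    EMPTY_CALL = "Empty GPT-3 call"
    SUGGESTION_MODIFICATION = "GPT-3 suggestion modification"
    USER_TEXT = "User text addition"


def summarise(session):
    """Count each construct and compute an illustrative AI-reliance ratio.

    The ratio is an assumption for this sketch: the share of text units that
    came from a GPT-3 suggestion (used as is or modified) among all units that
    contributed text. Empty calls add no text, so they are counted separately.
    """
    counts = Counter(session)
    ai_units = (counts[Construct.SUGGESTION_SELECTION]
                + counts[Construct.SUGGESTION_MODIFICATION])
    text_units = ai_units + counts[Construct.USER_TEXT]
    ai_reliance = ai_units / text_units if text_units else 0.0
    return counts, ai_reliance


# A made-up session: mostly the writer's own text, with some AI input.
example_session = [
    Construct.USER_TEXT,
    Construct.USER_TEXT,
    Construct.SUGGESTION_SELECTION,
    Construct.EMPTY_CALL,
    Construct.SUGGESTION_MODIFICATION,
    Construct.USER_TEXT,
]

counts, ai_reliance = summarise(example_session)
for construct in Construct:
    print(f"{construct.value}: {counts[construct]}")
print(f"Illustrative AI-reliance ratio: {ai_reliance:.2f}")
```

A ratio near 0 would correspond to a writer who mostly writes alone, and a ratio near 1 to a writer who leans heavily on suggestions. How to weigh modified suggestions against ones accepted as is remains a design choice that this sketch deliberately leaves open.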

Full details of the work can be found in the resources below:

- Published paper: PDF

- Presentation slides: PPT

- Open-source code: GitHub link

Several complex questions are yet to be answered:

- Is autonomy (self-writing, without AI support) preferable to better quality writing (with AI support)?

- As AI becomes embedded into our everyday writing, do we lose our own writing skills? And if so, is that of concern, or will writing become one of those outdated skills in the future that AI can do much better than humans?

- Do we lose our ‘uniquely human’ attributes if we continue to write with AI?

- What is an acceptable use of AI in writing that still lets you think? (We know that writing helps us think more clearly; would an AI tool providing the first draft restrict our thinking?)

- What knowledge and skills do writers need to use AI tools appropriately?

Edit: If you want to delve into the topic further, here’s an intriguing article that imagines how writing might look in the future: https://simon.buckinghamshum.net/2023/03/the-writing-synth-hypothesis/